Kubernetes RBAC: Deep Dive into Security and Best Practices

This guide explores the challenges of RBAC implementation, best practices for managing RBAC in Kubernetes,...

Jan 17, 2022

For the last decade, AWS has dominated the cloud computing space with a wide range of cloud services. One of AWS’ notable innovations is AWS Fargate, its serverless containers-as-a-service (CaaS) offering.

Before Fargate was introduced, those building in the cloud had to choose between running containers on infrastructure they managed themselves (the IaaS model) and using FaaS-style serverless functions. With AWS Fargate, users can run managed containers with a serverless, pay-as-you-go model and automatic scaling, all while avoiding the restrictions of AWS Lambda functions.

Unfortunately, there are no silver bullets in the world of software. Every solution has its pros and cons, and Fargate is no exception. The abstraction of the underlying infrastructure, usually its main benefit, also creates observability and security challenges.

In this article, I’ll discuss how Fargate works while exploring its security-related issues and the possible solutions.

AWS Fargate is a serverless container compute engine that was announced at re:Invent 2017. At the time, it only supported Amazon ECS; support for Amazon EKS followed in 2019. Thanks to the serverless nature of AWS Fargate, setting up and managing Kubernetes infrastructure became much easier.

Fargate was developed to remove the pain of managing EC2 worker nodes for Amazon EKS clusters. Cloud adopters can benefit from a pod-based cost model without dealing with the configuration, scaling, and patching of those nodes. This is because, with Fargate, AWS manages the compute that runs your pods, while EKS manages the Kubernetes control plane.

In other words, the underlying layer of EC2 cluster nodes is abstracted away from the cloud adopter. Pods are automatically deployed to a set of AWS-managed VMs and connected to the rest of the Amazon VPC through managed elastic network interfaces (ENIs), eliminating your EC2 setup woes.

Setting up AWS Fargate for Kubernetes primarily entails defining Fargate profiles. A profile is the administrative component that decides which pods run on the Fargate engine. Each profile contains “selectors” made up of a namespace and, optionally, labels; a pod whose namespace and labels match one of these selectors is run on Fargate. This is how Fargate takes over responsibility for running pods once they are scheduled.
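The selector-matching rule described above can be sketched in a few lines of Python. This is a simplified model, not the EKS scheduler: the class and function names are hypothetical, namespaces are compared exactly, and when several profiles match, the sketch simply picks the first one, whereas real EKS behavior has more rules.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Selector:
    """One Fargate profile selector: a namespace plus optional labels."""
    namespace: str
    labels: dict[str, str] = field(default_factory=dict)


@dataclass
class FargateProfile:
    name: str
    selectors: list[Selector]


def selector_matches(selector: Selector, namespace: str, pod_labels: dict[str, str]) -> bool:
    """A pod matches if its namespace is equal and it carries every selector label."""
    if selector.namespace != namespace:
        return False
    return all(pod_labels.get(key) == value for key, value in selector.labels.items())


def matching_profile(profiles: list[FargateProfile], namespace: str,
                     pod_labels: dict[str, str]) -> str | None:
    """Return the first profile with a matching selector, or None (pod is not run on Fargate)."""
    for profile in profiles:
        if any(selector_matches(s, namespace, pod_labels) for s in profile.selectors):
            return profile.name
    return None


profiles = [
    FargateProfile("web", [Selector("prod", {"tier": "frontend"})]),
    FargateProfile("batch", [Selector("jobs")]),
]
print(matching_profile(profiles, "prod", {"tier": "frontend", "app": "shop"}))  # web
print(matching_profile(profiles, "prod", {"tier": "backend"}))                 # None
print(matching_profile(profiles, "jobs", {}))                                   # batch
```

Extra pod labels (such as `app` above) do not prevent a match; only the labels listed in the selector have to be present.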

Naturally, there are more components to configure beyond the ones mentioned above. But overall, we can appreciate the sophistication of AWS Fargate as a CaaS offering. The flexibility, control, and power of Kubernetes are now packaged in a serverless compute service. This is advantageous for building and running Kubernetes applications in the cloud because there are no more nodes to manage.

However, the fact that there are no more nodes to manage also means that access to the actual nodes is restricted. This greatly limits our ability to instrument security at the core levels of the infrastructure. As mentioned in the introduction, there is no silver bullet in tech, but there are ways to mitigate the issues. I’ll cover some of these solutions in the coming sections.

Because the underlying VMs are abstracted away (along with the burden of managing EC2 instances), those building cloud applications do not have access to the hosts running them. Although this simplifies configuration and management, it comes with major drawbacks.

One drawback is that applications running on AWS Fargate cannot obtain root privileges on the host; for example, privileged containers and Kubernetes DaemonSets are not supported. This limits the cloud adopter’s ability to attach security instrumentation through host agents.

Host agents are privileged components installed on the worker nodes that run the applications being instrumented. In general, these agents are considered invasive, because a problem in the agent can disrupt the worker node itself. That’s why, over the past few years, many have turned to eBPF as a solution.
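To make these restrictions concrete, here is a minimal pre-flight check, assuming manifests have already been parsed into Python dicts. The function names are hypothetical and the checks are deliberately few rather than exhaustive: they flag only DaemonSets, host networking, host ports, and privileged containers, which are exactly the settings a typical host agent relies on and that Fargate does not support.

```python
def pod_spec_of(manifest: dict) -> dict:
    """Return the pod spec from a Pod, or from a workload's pod template."""
    spec = manifest.get("spec", {})
    if manifest.get("kind") == "Pod":
        return spec
    return spec.get("template", {}).get("spec", {})


def fargate_blockers(manifest: dict) -> list[str]:
    """List host-level settings in a manifest that Fargate does not support."""
    problems = []
    if manifest.get("kind") == "DaemonSet":
        problems.append("DaemonSets are not supported on Fargate")
    pod_spec = pod_spec_of(manifest)
    if pod_spec.get("hostNetwork"):
        problems.append("hostNetwork is not supported on Fargate")
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        if container.get("securityContext", {}).get("privileged"):
            problems.append(f"{name}: privileged containers are not supported on Fargate")
        for port in container.get("ports", []):
            if "hostPort" in port:
                problems.append(f"{name}: hostPort is not supported on Fargate")
    return problems


# A hypothetical host-agent DaemonSet, reduced to the relevant fields.
host_agent = {
    "kind": "DaemonSet",
    "spec": {"template": {"spec": {
        "hostNetwork": True,
        "containers": [{"name": "agent", "securityContext": {"privileged": True}}],
    }}},
}
for problem in fargate_blockers(host_agent):
    print(problem)
```

An ordinary application pod with no host-level settings produces an empty list, while the host agent above trips three separate checks.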

Although most of the Linux kernel’s tracing capabilities have been around for many years, eBPF has recently gained popularity as a less invasive approach to instrumentation. However, eBPF programs still have to run on the host, which rules them out for Kubernetes applications running on AWS Fargate. Future kernels are planned to support pod-level compartmentalization of eBPF, which would avoid the need to run it at the host level, but it will likely take years for technology stacks and the ecosystem to take advantage of this. And Fargate’s security issues don’t end with the lack of eBPF.

Many projects are instead built on APIs, an agentless attachment point. In Kubernetes, this means the Kubernetes API and audit logs. This form of attachment is not an alternative to the agent-based method; it complements it at a different layer of the system.

However, Kubernetes API integration does not provide the runtime security visibility that eBPF is known for. Security-wise, it only gives you a specific slice of information: control-plane activity, such as who created, changed, or read which resources, rather than what processes do inside a running container. Additionally, because there is no access to the nodes, instrumentation inside Fargate containers is largely restricted to what the Kubernetes API exposes.
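As a rough illustration of what the agentless approach can and cannot see, the following sketch scans Kubernetes audit events (one JSON object per line) for exec sessions and Secret reads. It assumes the events have already been exported from the cluster; the function name is hypothetical, and the sample records are made up and trimmed to a few fields of the `audit.k8s.io` Event schema (`stage`, `verb`, `user`, `objectRef`). Note the gap: the log records that alice opened an exec session, but not the processes, file accesses, or network connections that follow inside the container.

```python
import json


def flag_audit_events(lines: list[str]) -> list[str]:
    """Flag pod exec sessions and Secret reads in Kubernetes audit events (JSON lines)."""
    findings = []
    for line in lines:
        event = json.loads(line)
        # A request can be logged at several stages; only look at completed ones.
        if event.get("stage") != "ResponseComplete":
            continue
        ref = event.get("objectRef", {})
        user = event.get("user", {}).get("username", "<unknown>")
        target = f"{ref.get('namespace', '-')}/{ref.get('name', '-')}"
        if ref.get("resource") == "pods" and ref.get("subresource") == "exec":
            findings.append(f"{user} opened an exec session in pod {target}")
        elif ref.get("resource") == "secrets" and event.get("verb") in ("get", "list", "watch"):
            findings.append(f"{user} read secrets {target} ({event['verb']})")
    return findings


# Made-up sample events for illustration only.
sample = [
    json.dumps({"stage": "ResponseComplete", "verb": "create", "user": {"username": "alice"},
                "objectRef": {"resource": "pods", "subresource": "exec",
                              "namespace": "prod", "name": "web-1"}}),
    json.dumps({"stage": "ResponseComplete", "verb": "get", "user": {"username": "bob"},
                "objectRef": {"resource": "secrets", "namespace": "prod", "name": "db-creds"}}),
    json.dumps({"stage": "RequestReceived", "verb": "get", "user": {"username": "bob"},
                "objectRef": {"resource": "secrets", "namespace": "prod", "name": "db-creds"}}),
]
for finding in flag_audit_events(sample):
    print(finding)
```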

Considering how security instrumentation is performed, these APIs may be an integral part of security management for a Kubernetes application. Nevertheless, with these drawbacks in mind, the cloud community in general, and AWS specifically, is moving toward new and innovative solutions to these issues.

As mentioned above, one of the fundamental issues in Fargate is the lack of support for host agents, combined with the drawbacks of API-based approaches to instrumentation. eBPF would be an ideal solution, but it is not available for Kubernetes applications on AWS Fargate. There are some alternatives, however, and the major ones are explored below.

The Linux ptrace system call is a powerful kernel feature that allows a process with the right privileges to inspect, control, and modify another process. Because it intercepts system calls directly, the data it collects can be considered accurate. Since it has been one of the more effective ways to work around Fargate’s limitations, AWS recently added ptrace support to AWS Fargate.

Unfortunately, though effective, ptrace is also very inefficient. Tracing with PTRACE_SYSCALL is the equivalent of attaching a debugger: the traced process stops at the entry and at the exit of every system call, and each stop requires OS context switches to the tracer and back, which results in high overhead. So even though ptrace does offer some respite, it has pitfalls of its own.
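A back-of-the-envelope model shows why this overhead adds up. It assumes each system call produces two ptrace stops (entry and exit) and that each stop costs roughly two context switches (tracee to tracer and back), so the switching cost alone grows linearly with the syscall rate. The function name, syscall rate, and per-switch cost below are hypothetical illustration values, not measurements, and the model ignores the tracer’s own processing, so real overhead would be higher.

```python
def ptrace_switch_overhead(syscalls_per_sec: float, switch_cost_us: float,
                           stops_per_syscall: int = 2, switches_per_stop: int = 2) -> float:
    """Estimate CPU-seconds per second spent only on tracer/tracee context switches.

    Assumption: PTRACE_SYSCALL stops the tracee at syscall entry and at syscall
    exit (2 stops), and each stop hands control to the tracer and back
    (about 2 context switches).
    """
    switches_per_sec = syscalls_per_sec * stops_per_syscall * switches_per_stop
    return switches_per_sec * switch_cost_us / 1_000_000


# Hypothetical workload: 50,000 syscalls/s at about 1 microsecond per context switch.
overhead = ptrace_switch_overhead(50_000, 1.0)
print(f"{overhead:.2f} CPU-seconds per second")  # 0.20 CPU-seconds per second
```

Even with these modest numbers, a fifth of a CPU goes to switching alone, before the tracer does any useful analysis.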

An application-level agent places the attachment point inside the workload itself, and it may offer the best solution yet. It is a workload agent that runs in the same layer as the application. Istio is an example of this approach: it injects a sidecar proxy into each pod so that it runs alongside your application.
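The sidecar pattern can be sketched as a transformation of a pod manifest. This is a minimal sketch, not Istio’s injector: the agent name and image are hypothetical, and the manifest is a plain Python dict. The point is that the agent becomes an ordinary container in the application’s pod, so it can run without privileged mode or host access.

```python
import copy

AGENT_NAME = "security-agent"                            # hypothetical container name
AGENT_IMAGE = "registry.example.com/security-agent:1.0"  # hypothetical image


def inject_agent(pod: dict) -> dict:
    """Return a copy of a Pod manifest with the agent added as a sidecar container."""
    patched = copy.deepcopy(pod)  # never mutate the caller's manifest
    containers = patched.setdefault("spec", {}).setdefault("containers", [])
    if any(c.get("name") == AGENT_NAME for c in containers):
        return patched  # already injected; keep the operation idempotent
    containers.append({
        "name": AGENT_NAME,
        "image": AGENT_IMAGE,
        # The agent lives inside the pod, so it does not ask for privileged mode.
        "securityContext": {"privileged": False, "allowPrivilegeEscalation": False},
    })
    return patched


app_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {"containers": [{"name": "app", "image": "nginx:1.25"}]},
}
patched = inject_agent(app_pod)
print([c["name"] for c in patched["spec"]["containers"]])  # ['app', 'security-agent']
```

In a live cluster, this kind of change is typically applied at admission time by a mutating admission webhook, which is how Istio injects its sidecar. The sketch also hints at why removing the agent later is not free: it is now part of every patched workload’s specification.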

The benefit of application-level agents is that, unlike host agents, they do not require access to the worker nodes. This solution tackles security from the application side instead of the underlying infrastructure. Given the restrictions that AWS Fargate imposes, this form of attachment is the most effective way to provide the required level of security control.

As mentioned earlier, every solution has a drawback, but this one is manageable. In short, adopters may find the solution harder to manage as it scales. Because the agent runs inside the workload itself, removing it means readjusting the application’s configuration and connections. Simply pulling the agent out can also disrupt the application, since the agent was responsible for security monitoring while the application ran.

Even so, this method offers greater coverage for security instrumentation and optimization than other solutions, such as ptrace. This is primarily because the other forms of attachment either rely on node access, which has deliberately been abstracted away to achieve the serverless architecture that Fargate promises, or, like ptrace, impose heavy overhead.

AWS Fargate has brought many benefits to cloud computing by enabling serverless Kubernetes management. As a result, cloud adopters are finally free from the woes of managing EC2 instances, because the underlying nodes are abstracted away and managed by the cloud vendor.

However, this benefit comes with challenges. The design limits a developer’s access to the nodes and underlying infrastructure, which makes security instrumentation difficult. While there have been some developments to mitigate these pains, such as ptrace support, each comes with its own pain points.

This leaves application-side attachment as the best solution. Rather than trying to reach inaccessible nodes, this method approaches security through the application itself. To learn more, read the article “Don’t get attached to your attachment!”

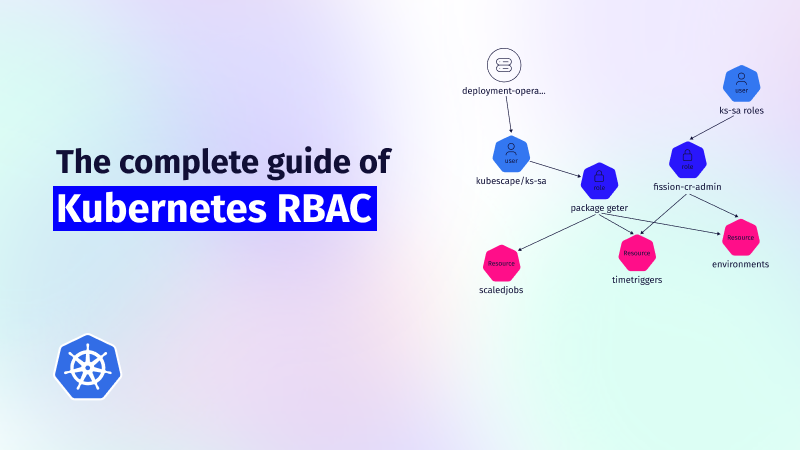
