Kubernetes RBAC: Deep Dive into Security and Best Practices

This guide explores the challenges of RBAC implementation, best practices for managing RBAC in Kubernetes,...

Dec 22, 2021

Kubernetes (K8s) secrets are the native resources for storing and managing sensitive data, like passwords, cloud access keys, or authentication tokens. You need to distribute this information across your Kubernetes clusters and protect it at the same time. When sending your password to each node in your cluster, it’s critical to ensure that only authorized entities (users, services, or workloads) are able to access it.

The building blocks of Kubernetes computation are pods made up of containers. You could bake your sensitive passwords into container images or configure them as part of pod definitions. The more secure and Kubernetes-native approach is to create secret objects and reference them in pod specifications (e.g., as a mounted file or an environment variable).

In the next section, I introduce the protection layers Kubernetes offers.

Secrets are native Kubernetes resources, and Kubernetes provides a basic set of protection layers for them. These protection measures have been developed over time and can be grouped as follows:

Since pods and secrets are separate objects, there’s less risk of exposing secrets during the pod lifecycle. Therefore, as a first security measure, if you’re passing sensitive information as environment variables to pods, you should separate them and create them as secret objects. As secrets are separate resources, you can also address them differently in RBAC and limit access.
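As a rough illustration of this separation, the sketch below builds a Secret manifest and a Pod manifest that only references it by name. The object names, namespace, image, environment variable name, and password value are all hypothetical placeholders. Note that the `data` field of a Secret is base64-encoded, which is an encoding and not encryption.

```python
import base64
import json


def make_secret(name: str, namespace: str, values: dict[str, str]) -> dict:
    """Build an Opaque Secret manifest; values under `data` are base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


def make_pod(name: str, namespace: str, image: str, secret_name: str, key: str) -> dict:
    """Build a Pod manifest that reads one secret key into an environment variable."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": image,
                    "env": [
                        {
                            # Hypothetical variable name; the value never appears here.
                            "name": "DB_PASSWORD",
                            "valueFrom": {
                                "secretKeyRef": {"name": secret_name, "key": key}
                            },
                        }
                    ],
                }
            ]
        },
    }


# Hypothetical names and a placeholder value, for illustration only.
secret = make_secret("db-credentials", "demo", {"password": "change-me"})
pod = make_pod("demo-app", "demo", "example.com/demo-app:1.0", "db-credentials", "password")

if __name__ == "__main__":
    print(json.dumps(secret, indent=2))
    print(json.dumps(pod, indent=2))
```

Because the pod manifest carries only a reference, it can be reviewed and shared without revealing the password, and RBAC rules can treat the Secret as its own resource.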

The second set of security features comes from kubelet, the agent that runs on each of the K8s nodes and interacts with the container runtime. Data in secrets is used inside containers, so it must be available on the node where the containers run. However, Kubernetes sends a secret to a node only if that node runs a pod requiring it. In addition, kubelet stores the secret data in temporary file storage (tmpfs) instead of on disk. When the pod is deleted or rescheduled from a node, kubelet also clears its local copy of the secret.

Numerous pods run on a node, but a pod can access only the secrets specified in its definition. On top of that, pods can consist of several containers, but a secret is mounted only into the containers that request it in their volumeMounts specification. So, the pod architecture minimizes the risk of secrets being exposed to other pods and containers.
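To make the per-container scoping concrete, here is a minimal sketch with a two-container pod in which only one container lists the secret volume under `volumeMounts`. The pod, container, volume, and image names are hypothetical, and the helper function is an illustration written for this article, not part of any Kubernetes client.

```python
def containers_mounting_secret(pod: dict, secret_name: str) -> list[str]:
    """Return the names of containers that mount a volume backed by `secret_name`."""
    spec = pod["spec"]
    # Volumes in this pod that are backed by the given Secret.
    volume_names = {
        volume["name"]
        for volume in spec.get("volumes", [])
        if volume.get("secret", {}).get("secretName") == secret_name
    }
    # Containers that explicitly mount one of those volumes.
    return [
        container["name"]
        for container in spec.get("containers", [])
        if any(m["name"] in volume_names for m in container.get("volumeMounts", []))
    ]


# Hypothetical pod: "app" mounts the secret, "sidecar" does not.
two_container_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo-app", "namespace": "demo"},
    "spec": {
        "volumes": [{"name": "creds", "secret": {"secretName": "db-credentials"}}],
        "containers": [
            {
                "name": "app",
                "image": "example.com/demo-app:1.0",
                "volumeMounts": [
                    {"name": "creds", "mountPath": "/etc/creds", "readOnly": True}
                ],
            },
            {"name": "sidecar", "image": "example.com/log-shipper:1.0"},
        ],
    },
}

mounting = containers_mounting_secret(two_container_pod, "db-credentials")

if __name__ == "__main__":
    print(mounting)
```

In this sketch only `app` is reported, since `sidecar` has no `volumeMounts` entry for the secret volume even though both containers belong to the same pod.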

Secrets are created and accessed over the Kubernetes API. Accordingly, Kubernetes protects the communication between users, the API server, and kubelet through SSL/TLS.

Like all other Kubernetes resources, secrets are stored in etcd. This means it’s possible to reach secrets when you access the etcd running in your control plane. The protection measure provided by Kubernetes is encryption at rest for the secret data. You can check the official documentation to configure encryption of secret data at rest.
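As a hedged sketch of what such a configuration can look like, the snippet below generates an `EncryptionConfiguration` that encrypts secrets with the `aescbc` provider and a freshly generated 32-byte key. The key name and the choice of `aescbc` are assumptions made for illustration; the official documentation lists the available providers and explains how to pass the file to the API server through its `--encryption-provider-config` flag. The output is JSON, which is also valid YAML.

```python
import base64
import json
import secrets as token_source  # stdlib module for cryptographically strong random bytes


def make_encryption_config(key_name: str = "key1") -> dict:
    """Build an EncryptionConfiguration that encrypts Secret objects at rest."""
    # aescbc expects a base64-encoded 32-byte key.
    key = base64.b64encode(token_source.token_bytes(32)).decode("ascii")
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    # The first provider is used for writing new data.
                    {"aescbc": {"keys": [{"name": key_name, "secret": key}]}},
                    # identity lets the API server still read data stored unencrypted.
                    {"identity": {}},
                ],
            }
        ],
    }


encryption_config = make_encryption_config()

if __name__ == "__main__":
    # Treat the generated file like a secret itself: it contains the encryption key.
    print(json.dumps(encryption_config, indent=2))
```

Keeping the key in a file on the control plane node only protects against someone who obtains etcd data without that file, which is one reason the external key management options discussed later exist.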

These protection measures ensure that secrets are separated from other Kubernetes resources and are accessed and stored securely. Still, Kubernetes isn’t a bulletproof security system and comes with some risks.

When it comes to secrets, the potential risks are well known and can be mitigated with third-party tools and extensions. The risks can be grouped as follows:

As the ultimate location where secrets are stored, etcd must be encrypted and well protected. You should enable data encryption and limit access to the etcd clusters. And of course, peer-to-peer communication between etcd nodes should use SSL/TLS.

You can create secret objects using JSON or YAML manifest files. Just make sure the files aren’t checked into a source repository or shared.

When the secrets are loaded in your application, be careful about logging or transmitting them to untrusted parties.
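One way to reduce accidental logging is to redact known secret values before a log line is written. The following is a minimal sketch using Python's standard `logging` module; the filter class, logger name, and placeholder password are hypothetical, and a real application would load the values from its mounted secret files or environment variables.

```python
import io
import logging


class RedactSecretsFilter(logging.Filter):
    """Replace known secret values in log messages with a fixed marker."""

    def __init__(self, secret_values):
        super().__init__()
        # Ignore empty strings, which would otherwise match everywhere.
        self._secret_values = [value for value in secret_values if value]

    def filter(self, record: logging.LogRecord) -> bool:
        # Render the final message first so secrets passed as arguments are caught too.
        message = record.getMessage()
        for value in self._secret_values:
            message = message.replace(value, "[REDACTED]")
        record.msg = message
        record.args = ()
        return True  # Keep the record; only its content was changed.


# Demo wiring: log into an in-memory stream so the result can be inspected.
log_stream = io.StringIO()
demo_logger = logging.getLogger("redaction-demo")
demo_logger.setLevel(logging.INFO)
demo_logger.propagate = False
demo_handler = logging.StreamHandler(log_stream)
demo_handler.addFilter(RedactSecretsFilter(["change-me"]))  # placeholder secret value
demo_logger.addHandler(demo_handler)

demo_logger.info("connecting with password=%s", "change-me")

if __name__ == "__main__":
    print(log_stream.getvalue(), end="")
```

This only catches exact matches of values the filter knows about, so it complements rather than replaces the habit of not passing secrets to log calls in the first place.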

If a user has sufficient permissions to create a pod that mounts and uses a secret, the secret’s value will also be visible to them. Even if you set Kubernetes RBAC rules to limit access to secrets, the user could start a pod that exposes the secret by sending it outside the cluster or writing it into pod logs. Keep this indirect association between secrets and their consumers in mind when designing your security concept.
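The sketch below illustrates this gap with two hypothetical Roles: one that may read a single named secret, and one that has no rule for secrets but may create pods. The `allows` helper is a deliberately simplified check written for this example (it ignores `resourceNames`, subresources, and ClusterRoles), not how the Kubernetes authorizer is implemented.

```python
def make_secret_reader_role(namespace: str, secret_name: str) -> dict:
    """Build a Role that may only `get` one named Secret in one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "read-" + secret_name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group
                "resources": ["secrets"],
                "resourceNames": [secret_name],
                "verbs": ["get"],
            }
        ],
    }


def allows(role: dict, resource: str, verb: str) -> bool:
    """Simplified check: does any rule grant `verb` on core-group `resource`?"""
    for rule in role.get("rules", []):
        groups = rule.get("apiGroups", [])
        if "" not in groups and "*" not in groups:
            continue
        resources = rule.get("resources", [])
        verbs = rule.get("verbs", [])
        if (resource in resources or "*" in resources) and (verb in verbs or "*" in verbs):
            return True
    return False


def can_expose_secrets_indirectly(role: dict) -> bool:
    """A subject that can create pods can mount secrets from the same namespace."""
    return allows(role, "pods", "create")


# Hypothetical roles for illustration.
reader_role = make_secret_reader_role("demo", "db-credentials")
deployer_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "deployer", "namespace": "demo"},
    "rules": [{"apiGroups": [""], "resources": ["pods"], "verbs": ["create"]}],
}

if __name__ == "__main__":
    for role in (reader_role, deployer_role):
        print(
            role["metadata"]["name"],
            "direct:", allows(role, "secrets", "get"),
            "indirect:", can_expose_secrets_indirectly(role),
        )
```

The `deployer` role shows no direct access to secrets, yet it is flagged for indirect exposure, which is why pod creation rights in a namespace deserve the same scrutiny as read access to that namespace's secrets.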

Containers run on the node, and anyone with root access on the node can retrieve any secret from the Kubernetes API server by impersonating kubelet. So be sure to protect your nodes as well as your Kubernetes control planes.

Next up, I cover open-source, cloud-specific, and more inclusive tools to mitigate the risks above and protect Kubernetes secrets.

There are various tools and strategies that can take your Kubernetes secrets security to the next level. In this section, I cover the available tools as well as their benefits and drawbacks to help you choose the right one (or more than one) for designing your clusters.

Cloud providers like GCP and AWS have their own cloud key management systems (KMS), a centralized cloud service through which you can create and manage keys to perform cryptographic operations. These systems can also serve as an additional security layer for the encryption of Kubernetes secrets at the application layer.

The greatest benefit of KMS is that it protects against attackers who have gained access to a copy of etcd. It can be a little tricky to integrate, however, so first research how to integrate KMS into your cluster and confirm that it conforms with your security operations. KMS also has two major drawbacks: first, you need to change your application code to work with KMS providers; second, it relies on authenticating the node, so attackers who are already on the node can easily access your data.

Sealed secrets help mitigate the risks related to CaC and leaking secrets into source-code repositories by encrypting secrets locally into a format that is safe to store and publish. When the cluster needs to use the secret, it is decrypted only by the controller running in the cluster.

This approach requires installing a controller in the cluster and a client tool called kubeseal on the local workstation. If you only care about protecting your secret objects as files, it’s pretty straightforward to install and operate. However, be careful with sealed secrets, since they only protect against theft from the source code repository. For instance, if you check out your keys and secrets to your workstation during installation, they will be in danger.

Helm is a useful tool for installing complex applications to clusters, including their configuration and sensitive data. However, the problem of leaking secret values into source code repositories still applies to Helm charts. Operations teams maintain the Helm charts in source code repositories with value files separately or in the same location.

Helm secrets is a Helm plugin for encrypting secrets through Mozilla’s open source SOPS project. It is also an extendable platform that supports external key management systems like Google Cloud KMS and AWS KMS. If you’re deploying your applications with Helm and storing sensitive data in your values, you’ll want to be sure to secure your Helm chart values. Similar to sealed secrets, the Helm secrets plugin only protects the sensitive data in the source code repository. It does not provide any protection for the storage of secrets in the Kubernetes API or etcd.

ARMO is a comprehensive, all-in-one method for protecting your secrets and their lifecycle in the source code repository and cluster. It provides all the protection aspects of the tools above plus additional benefits including:

This end-to-end approach ensures that secrets are not compromised when they are read at the pod level, for example through access to a node and reading pod memory. The process can be divided into two stages:

In this stage, you encrypt the secret files and define an encryption policy allowing the pod to decrypt the file in runtime.

The pod is deployed with the encrypted secret file. Then the workload, namely the pod, is signed with ARMO’s agent on the node. The key to decrypt the secret file is kept in the DRT, with the patented secret protection mechanism, and is handed over to the pod.

ARMO covers all the risks cited and provides far more features than other Kubernetes security tools to ensure your secrets are really protected.

To sum up, Kubernetes secrets are the cloud-native way of storing and managing sensitive information in the cloud. While Kubernetes offers some protection, many open risks remain to consider. You can mitigate these risks by incorporating one or more tools in your operations stack.

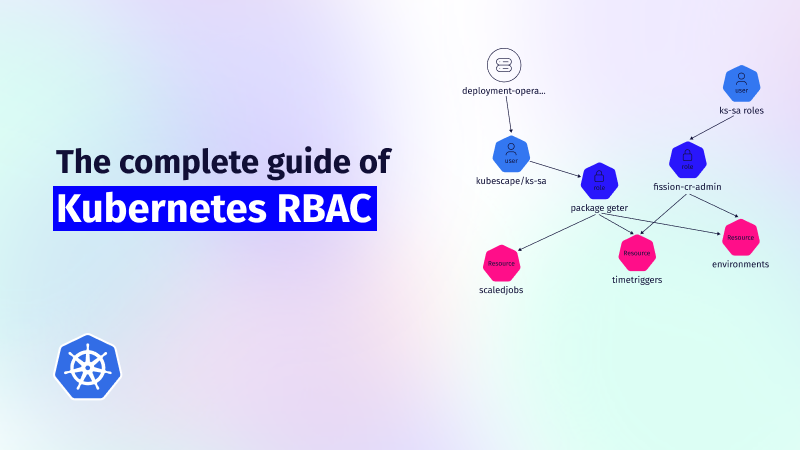
