Kubernetes

What is Kubernetes?

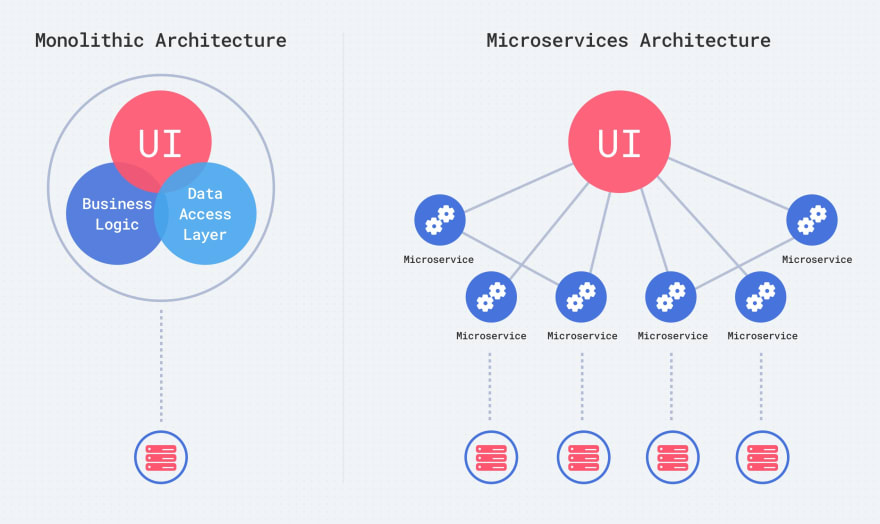

Today, a conversation about modernizing a legacy application or developing new capabilities will inevitably bring up containers and microservices. These have become the buzzwords du jour in software development circles in the last few years, and for good reason. Microservice-oriented architectures that use containers are generally easier to manage and deploy than monolithic applications. And unlike modules within a monolithic architecture, individual microservices can be updated or completely replaced without impacting other application components, since they interact with other services only via APIs.

In this age of DevOps, companies embrace the need to deliver features and eliminate technical debt in a continuous development and deployment cycle. Microservice architectures and container-based deployments fit in well with this philosophy. Still, on their own, these methods don’t address critical challenges like scalability or the need for services to function in multiple hosting environments. That’s where orchestration comes in. While containers give microservice-based applications a self-contained execution and deployment environment, container orchestration automates the deployment, scaling, management, and networking of those containers in whatever environment they run.

Kubernetes Overview

Google first developed Kubernetes (K8s) to address the growing need for cloud-native application frameworks. Google later donated it to the Cloud Native Computing Foundation, where it became one of the most prolific open source projects in history.

Kubernetes is an open-source platform that automates container operations by eliminating the manual processes involved in deploying and scaling containerized applications. It allows developers to organize containers hosting microservices into clusters, handles scaling, and automates failover procedures. In essence, it simplifies the work required to manage containers while also providing the capability to run distributed systems with a high level of resiliency. Here’s a run-down of the high-level features of Kubernetes:

- Automated deployments and rollbacks– Developers can define the desired end state for deployed containers, and Kubernetes works continuously to bring the actual state in line with it. For example, Kubernetes will replace or restart containers that go down, shift resources between containers, or remove containers from the configuration until the actual state matches the desired state.

- Automated discovery and load balancing– Kubernetes can expose a container with an IP address or domain name and distribute network traffic across containers as needed to maintain performance.

- Automated resource management– Developers can provide Kubernetes with a cluster of nodes on which to run containers and specify how much CPU and RAM each container needs. Kubernetes will fit containers onto the nodes in the cluster as needed to optimize resource usage.

- Storage orchestration– Developers can use Kubernetes to automatically mount disparate storage systems (local, cloud, network) for their applications.
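The desired-state idea behind automated deployments can be illustrated with a toy reconciliation loop. This is a minimal sketch, not how Kubernetes is implemented: `ClusterState`, `reconcile`, and the `web-N` container names are hypothetical and exist only for this example. It assumes the only thing being reconciled is the number of running containers.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Toy stand-in for the observed state of one workload (hypothetical)."""
    running: list[str] = field(default_factory=list)  # names of healthy containers
    next_id: int = 0  # counter used to name newly started containers


def reconcile(desired_replicas: int, state: ClusterState) -> list[str]:
    """Run one reconciliation pass and return the actions taken."""
    actions = []
    # Too few containers: start new ones until the count matches.
    while len(state.running) < desired_replicas:
        name = f"web-{state.next_id}"
        state.next_id += 1
        state.running.append(name)
        actions.append(f"start {name}")
    # Too many containers: stop the surplus, newest first.
    while len(state.running) > desired_replicas:
        name = state.running.pop()
        actions.append(f"stop {name}")
    return actions


state = ClusterState()
print(reconcile(3, state))     # initial rollout: starts three containers
state.running.remove("web-1")  # simulate a container going down
print(reconcile(3, state))     # starts one replacement
print(reconcile(2, state))     # desired state lowered: stops one container
```

The caller only ever states the target count. Whether that leads to starting, replacing, or stopping containers is decided by comparing the target with what is currently observed.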

Kubernetes Architecture

Deploying Kubernetes results in the creation of a cluster. A Kubernetes cluster contains a set of machines called nodes that host containerized applications. These nodes host the Pods, the smallest deployable computing units created and managed in Kubernetes. The control plane oversees the nodes and the Pods in the cluster. Here is a high-level breakdown of the components that make up a Kubernetes cluster:

- Nodes– In Kubernetes, a node is a virtual or physical machine that runs workloads. Each node contains the services necessary to run pods:

  - Container runtime– The software responsible for running containers.

  - Kubelet– An agent that runs on each node in a cluster. It ensures that the containers described in a pod's specification are running and healthy.

  - Kube-proxy– A network proxy service that runs on each node in a given cluster. It maintains network rules on nodes that allow network communications to pods from within or outside the cluster.

- Pods– A pod is a group of one or more containers with shared storage and network resources, together with a specification for how to run those containers. A pod’s contents are always co-located and co-scheduled, and its containers are tightly coupled and must execute on the same logical host. There are two primary ways to use pods in Kubernetes:

  - Pods that run a single container: In the most common use case, the pod acts as a wrapper around a single container, allowing Kubernetes to manage pods rather than individual containers.

  - Pods that run multiple containers dependent on one another: In this case, the pod encapsulates an application composed of multiple containers that need to share resources.

- Control Plane– The Control Plane is responsible for maintaining the desired end state of the Kubernetes cluster as defined by the developer. It consists of the following components:

  - Kube API Server– Exposes the Kubernetes API and acts as the front end for the control plane.

  - etcd– A consistent, highly available key-value store used as the backing store for all cluster data.

  - Kube-scheduler– Watches for newly created pods that have no assigned node and selects a node for each one based on factors such as resource requirements, policy constraints, and locality specifications.

  - Kube Controller Manager– Runs controller processes for the control plane. These include:

    - Node controller– Notices and responds when nodes go down.

    - Job controller– Watches for one-off tasks (Jobs) and creates pods to run them to completion.

    - Endpoints controller– Connects services and pods.

    - Service Account and Token controllers– Create default accounts and API access tokens.

  - Cloud Controller Manager– Embeds cloud-provider-specific control logic into the control plane and allows developers to link a cluster into a cloud provider’s native API.

The Kubernetes API

The Kubernetes API sits at the core of how cloud-native applications are modeled and managed with Kubernetes. It provides a well-defined interface through which end users, the different parts of the cluster, and external components communicate with one another. In conjunction with Kubernetes operators, which extend the API with application-specific resources and controllers, it provides a method of packaging, deploying, and managing an application in production.
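To make the API less abstract, the sketch below builds the kind of object a client would submit to it: a Deployment in the `apps/v1` API group, expressed as a plain Python dictionary and printed as JSON. The helper names `deployment_manifest` and `deployments_path`, the workload name `web`, the image tag, and the resource figures are all placeholders chosen for this example. No request is actually sent; the code only prints the REST path and the body.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment object as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # desired number of pods
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Placeholder CPU and memory figures.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"},
                                "limits": {"cpu": "500m", "memory": "256Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


def deployments_path(namespace: str) -> str:
    """REST path for the Deployment collection in a namespace."""
    return f"/apis/apps/v1/namespaces/{namespace}/deployments"


manifest = deployment_manifest("web", "nginx:1.27", 3)
print("POST", deployments_path("default"))
print(json.dumps(manifest, indent=2))
```

The selector labels must match the labels on the pod template, which is why both are derived from the same `labels` value here.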

A Breakthrough Cloud-Native Application Platform

Kubernetes is a breakthrough platform providing cloud-native application management through orchestration, designed from the ground up to support DevOps deployments built on containers and microservices. Manually managing the pods on each node at scale would be virtually impossible without the control, automation, and orchestration that Kubernetes provides. Countless organizations use Kubernetes in their IT departments and development teams to quickly deliver highly resilient, scalable applications to end users, with far less risk of accruing technical debt over time than with monolithic approaches. As Kubernetes adoption becomes even more widespread, the demand for development and lifecycle management solutions and for comprehensive cloud-native application security will only continue to increase.